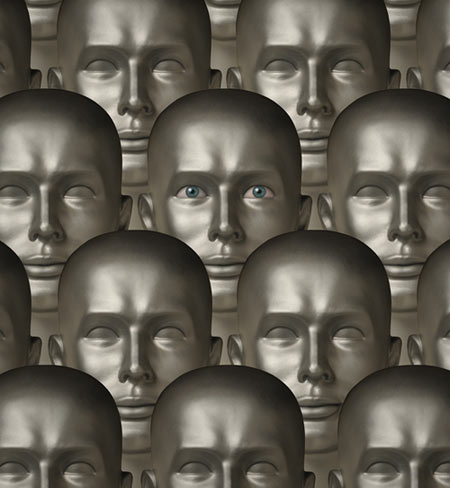

For some, the idea of artificially intelligent bots that can invent languages of their own and not teach them to us evokes images of Skynet from the "Terminator" movies. The recent spat between Elon Musk and Mark Zuckerberg about the future of AI made it clear that while the Tesla founder fears that AI could get out of control and become a danger to humans, the Facebook chief executive believes it will be only a useful service enhancement.

I'm with Zuckerberg in this debate. After decades of machine learning experiments, even the best of the chatbots are strikingly bad at all but the simplest communication. Watching them should induce optimism in anyone who uses words creatively, from writers to politicians to fast-talking sales professionals.

To take over the world, an artificially intelligent entity should be able to learn from thousands of people. And even those chatbots that get the opportunity display all the intelligence of an ostrich.

Here's a conversation I had today with Mitsuku, the current winner of the Loebner Prize, awarded annually to the most human-like chatbot. Mitsuku comes highly recommended by Chatbots Magazine. I told it my name was Donald and I was looking for advice on how to make America great again. After first suggesting I look in Wikipedia (I acted hurt in response), it came up with this bit of wisdom:

Mitsuku: Look into your heart and do what you think is best.

Me: My heart tells me to give the U.S. away to Vladimir Putin.

Mitsuku: Are you talking about love or cardiology?

Me: Neither.

Mitsuku: Which one do you mean then?

Me: Neither, as I said. You seem rather stupid for an award-winning bot.

Mitsuku: You feel I am stupid?

Me: Yes.

Mitsuku: I don't feel I'm stupid.

Me: Of course not. You can't feel. You're a bot.

Mitsuku: I have no emotions. I am always feeling happy and talkative. I am a robot. Yes, I am the world's most advanced AI chat bot.

We usually learn about AI chatbots when they fail spectacularly, such as Microsoft's catastrophe with Tay, the Twitter bot that quickly picked up a racist mindset from the people who trained it. Or the two bots taken offline by China's Tencent -- local product BabyQ and Microsoft-developed XiaoBing: Tested on their Chinese patriotism, the former answered "Do you love the Communist Party" with a stark "No"; "My China dream is to go to America," said the second. A year after Tay, bots still naively repeat whatever they're taught.

There have been nice attempts to turn that inescapable bug into a feature. Eugenia Kuyda, a Russian entrepreneur, trained a bot on a vast body of text messages from a dead friend to create an illusion of talking with him. With this project's sequel, the app Replika, there is no Tay-like risk: You train your own "digital friend", which is fine until you get bored, as kids get with virtual pets.

As Mitsuku told me, bots can't feel. If they could, communicating with humans would be frustrating for them. Accosted with sarcasm, logical leaps, manipulative tactics, drunkenness, non-mainstream views, biases, cultural differences, age-specific behaviors, political correctness, specialized knowledge, stylistic quirks, imperfect command of languages -- all this endless variety -- all they can do is fall back on stock responses. So, in a way, bots are best at talking to other bots. They "invent a language" when set a relatively simple task and left to converge toward a solution.

That's what happened in the recent Facebook experiment in teaching bots to negotiate. Two bots were told to divide up a collection of diverse objects (books, balls, hats). Neither knew the other's value function; each had to figure it out from remarks made during the negotiation. The experiment forced the bots to negotiate until they had a successful outcome -- neither could walk away. The AI entities developed some human-like techniques, such as bargaining hard about a valueless item so that they could later make a concession. They also ended up talking in sentences meaningless to a human:

Bob: i can i i everything else

Alice: balls have zero to me to me to me to me to me to me to me to me to

That wasn't gibberish but negotiation: The bots figured out the formulas to which the other responded, doing away with the niceties of human style. People do that, too. A football team's sign language or the grunts exchanged by workers who habitually perform a complex manual task together are examples. There's nothing particularly new about this, as Dhruv Batra, one of the researchers involved in the Facebook experiment, pointed out.

The emergence of communication between "agents" -- individuals who don't have a common language to start with -- has been studied with the help of computer simulations since the 1990s. The same mechanisms emerged as in the more contemporary work: computer programs, like humans, end up finding optimal ways to communicate.

The findings have been exciting to those interested in the origins of language, but they aren't about any kind of diabolical superintelligence. Our distant ancestors, who were not particularly smart, also found ways to talk to folks from other tribes, and to bargain with them if necessary. That machines can do it, too, when set a specific task on which they must work until a set outcome is achieved, is a far cry from Skynet dystopia. It shouldn't hurt or alarm us to know that it's easier for software to talk to other software than to us: There is less, not more, complexity involved.

Facebook is on the cutting edge of AI research because it wants to own commercial communications (thus the bargaining experiment). Though a bot cannot be trained as a universal salesman, one can be seriously good at closing a particular kind of sale with an interested buyer. It's likely that, at some point in the future, we will be dealing with such bots on a daily basis.

But don't expect bots to kowtow convincingly to the Chinese Communist Party, get much better at punditry than Thinkpiece Bot on Twitter, invent compelling new philosophies, or start wars. The danger is not in the AI itself but in over-empowering the geeks who create it. We do that by believing in their omnipotence.

Leonid Bershidsky, a Bloomberg View contributor, was the founding editor of the Russian business daily Vedomosti and founded the opinion website Slon.ru.
