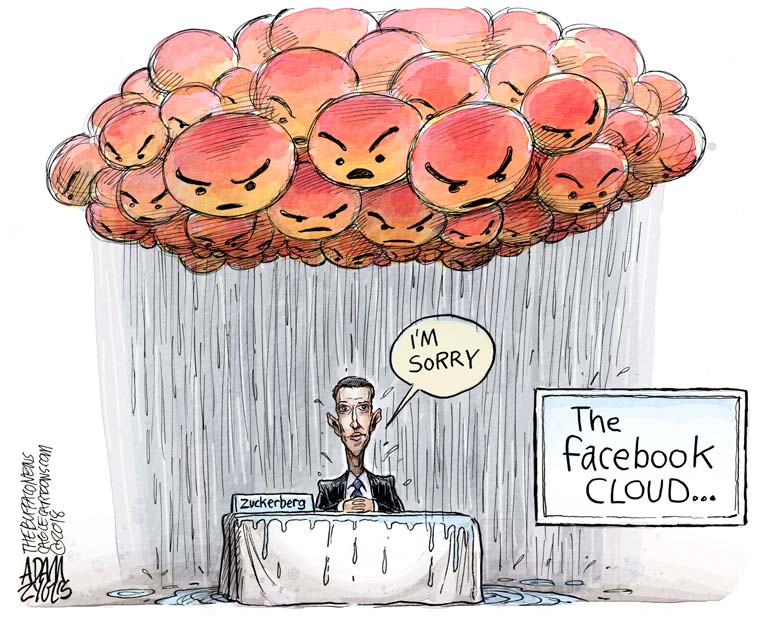

To follow Mark Zuckerberg's utterances is to constantly cringe.

Last week was no different. The Facebook founder - fresh off his failed fake-news apology tour - seemed to give support to Holocaust deniers.

"I don't think that they're intentionally getting it wrong," was the money quote in Kara Swisher's Recode interview with the world's richest Harvard dropout - raising the question of whether Zuckerberg is in a secret race to the bottom with fellow billionaires Elon Musk and Peter Thiel.

Condemnation justifiably followed.

Jonathan Greenblatt, chief executive of the Anti-Defamation League, put it bluntly to CNN: "Holocaust denial is a willful, deliberate and long-standing deception tactic by anti-Semites that is incontrovertibly hateful, hurtful, and threatening to Jews. Facebook has a moral and ethical obligation not to allow its dissemination."

Greenblatt, of course, has the high ground here.

And Facebook has done so much wrong - including allowing its global platform to be misused in ways that threaten the world order - that Zuckerberg has earned not an inch of slack.

(One example close to my heart: The company recently began treating news articles on political topics as if they were political advertising, dangerously blurring the line between propaganda and news - an industry to which Facebook already has done irreparable harm.)

But the issue of what to ban on Facebook - and how - is tricky, especially to those who believe deeply in free speech.

"One of the problems with defending free speech is you often have to defend people that you find to be outrageous and unpleasant and disgusting," author Salman Rushdie once observed.

The renowned First Amendment lawyer Floyd Abrams told me last week that Facebook talks out of both sides of its mouth when it comes to limiting what's allowed.

"They say 'we don't do editing' but they do make choices," Abrams said. And they must. But in his estimation, "the public is served best when they lean heavily toward allowing speech."

He elaborated: Facebook "can and should say no to certain categories of speech, including that which is overtly racist. But you'd better not start barring Charles Murray." (Murray is the conservative political scientist who argued controversially that government spending on welfare is largely wasted.)

I've believed for a long time that Facebook needs to cop to the reality that it is a media company, not just a neutral platform. And to act accordingly, with large numbers of editors - yes, editors! - in place to make intelligent decisions about removing dangerous disinformation and unacceptable speech, as these issues arise.

"Maybe there needs to be a job called, like, Platform Editor, where someone works to not only stop manipulation but also works across the security team and the content team and in between the different business verticals to ensure the quality and integrity of the platform," suggested Jonathan Albright, a leading thinker on tech issues, in a Nieman Lab interview early this year.

Albright added, referring to one particularly egregious example of bad information amplified by a platform's algorithms: "I mean: 4chan trending on Google during the Las Vegas shooting? How that even happened I have no idea, but I do know that one person could have stopped that."

But to stop that, the tech giants need to take responsibility for truly dangerous speech, without inhibiting legitimate free speech. And this isn't always a black-and-white choice.

Facebook amplifies some things. It hides others, and labels still others.

"Facebook is a highly curated space," noted Jameel Jaffer, director of the Knight First Amendment Institute at Columbia University.

But, he told me last week, it's unrealistic to ask Facebook to "police its space for false speech," given its 2 billion users.

So Zuckerberg was correct, in a narrow sense, when he told Swisher, "I just don't think that it is the right thing to say, 'We're going to take someone off the platform if they get things wrong, even multiple times.' "

But that doesn't mean the approach should be to continue business as usual while giving lip service to improvements.

Facebook's algorithms decide who sees what, how prominently, how quickly it's shared and how it is labeled. These are what Jaffer would call "curatorial judgments."

I'd take it a step further and call them editorial decisions.

Whatever you call them, they matter. A lot.

In the Atlantic, Yair Rosenberg suggested one possibility for dealing with a prominent Holocaust-denying Facebook account: aggressive counterprogramming that promotes truth.

"Imagine if instead of taking it down, Facebook appended a prominent disclaimer atop the page," he wrote.

Something like this: "This page promotes the denial of the Holocaust, the systematic 20th-century attempt to exterminate the Jewish people which left 6 million of them dead, alongside millions of political dissidents, LGBT people, and others the Nazis considered undesirable. To learn more about this history and not be misled by propaganda, visit these links to our partners at the United States Holocaust Museum and Israel's Yad Vashem."

More simply, Facebook could recognize that Holocaust denialism is hate speech, and forbid it on those grounds.

But widespread censorship and chasing down every falsehood ought to be acknowledged as bad ideas. Vera Eidelman, an American Civil Liberties Union fellow, rightly noted last week that already marginalized voices - activists of color, for example - are likely to be silenced first if Facebook expands its censorship powers. Zuckerberg got that much right, though in a remarkably misguided way.

Meanwhile, his company remains a dangerous mess.

In treating what ails Facebook, the cure shouldn't be worse than the disease.

Previously:

• 06/11/18: Shocked by Trump aggression against reporters and sources? The blueprint was made by Obama

• 05/14/18: Symptoms at NBC hint at a larger sickness

• 10/18/17: From the pen of an 18-year-old Harvey Weinstein: An aggressor who won't be refused

• 01/12/17: BuzzFeed crossed the line in publishing salacious 'dossier' on Trump

• 11/09/16: The media didn't want to believe Trump could win. So they looked the other way

• 11/02/16: Nate Silver blew it when he missed Trump - now he really needs to get it right

• 10/20/16: How the Committee to Protect Journalists broke its own rule to protest Trump
